Table of Links

-

Experiments

5 Related Work

Parameter-Efficient Tuning of PLMs. As it is increasingly infeasible to train and store full copies of large PLMs for various downstream tasks, how to efficiently tune the PLMs with few trainable parameters becomes critical. Existing PELT methods can be largely divided into two categories based on whether new trainable parameters are introduced. Specifically, one may either train a subset of the model parameters such as the prediction head (Lee et al., 2019) and bias terms (Ben Zaken et al., 2021), or introduce task-specific parameters to different parts of the PLM such as before multi-head attention (Li and Liang, 2021) or after feedforward layer (Houlsby et al., 2019). As the number of PELT methods keeps increasing, the purpose of UNIPELT is to better understand and leverage the distinctions of various methods instead of proposing yet another method.

Mixture-of-Experts. UNIPELT is also related to approaches that involve a high-capacity network and activate (upweight) different parts of the network given different inputs. One notable example is Mixture-of-Experts (MoE) (Jacobs et al., 1991; Shazeer et al., 2017), which maintains a set of experts (neural networks) and one or more trainable gates that select a combination of the experts specific to each input. Despite being conceptually similar, UNIPELT is different from MoE: the submodules in UNIPELT are not combined explicitly by summation like MoE but in sequential order and affect each other implicitly. Moreover, the “experts” are diverse in UNIPELT while usually homogeneous or identical in MoE methods.

6 Conclusion

In this paper, we present a comprehensive study of representative parameter-efficient language model tuning (PELT) methods and propose a unified framework, which incorporates different PELT methods as submodules and learns to activate the most appropriate submodules for a given task or data setup. Our proposed framework consistently outperforms conventional fine-tuning as well as the submodules that it incorporates under different setups, and generally surpasses the upper bound that takes the best performance of each submodule used individually on each task. Our findings suggest that a mixture of multiple PELT methods that involve different parts of the PLM may be favorable regarding both model effectiveness and robustness. For future work, we will try to better understand the discrepancy of various PELT methods in different scenarios. We also plan to investigate a multi-task setting where multiple submodules can be activated and cooperate at the task level.

Acknowledgements

We thank Xiang Lisa Li, Hai Ye, Rabeeh Karimi Mahabadi, Junxian He, Yiqing Xie, Yaqing Wang, and Liyuan Liu for helpful discussions and feedback. We thank anonymous reviewers for valuable comments and suggestions.

References

Elad Ben Zaken, Shauli Ravfogel, and Yoav Goldberg. 2021. Bitfit: Simple parameter-efficient fine-tuning for transformer-based masked language-models. arXiv e-prints, pages arXiv–2106.

Tom B Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. arXiv preprint arXiv:2005.14165.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics.

Ning Ding and Shengding Hu. 2021. Must-read papers on prompt-based tuning for pre-trained language models. https://github.com/ thunlp/PromptPapers.

Tianyu Gao, Adam Fisch, and Danqi Chen. 2021. Making pre-trained language models better few-shot learners. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 3816–3830, Online. Association for Computational Linguistics.

Yuxian Gu, Xu Han, Zhiyuan Liu, and Minlie Huang. 2021. Ppt: Pre-trained prompt tuning for few-shot learning. arXiv preprint arXiv:2109.04332.

Demi Guo, Alexander Rush, and Yoon Kim. 2021. Parameter-efficient transfer learning with diff pruning. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 4884–4896, Online. Association for Computational Linguistics.

Ruidan He, Linlin Liu, Hai Ye, Qingyu Tan, Bosheng Ding, Liying Cheng, Jiawei Low, Lidong Bing, and Luo Si. 2021. On the effectiveness of adapterbased tuning for pretrained language model adaptation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 2208–2222, Online. Association for Computational Linguistics.

Neil Houlsby, Andrei Giurgiu, Stanislaw Jastrzebski, Bruna Morrone, Quentin De Laroussilhe, Andrea Gesmundo, Mona Attariyan, and Sylvain Gelly. 2019. Parameter-efficient transfer learning for nlp. In International Conference on Machine Learning, pages 2790–2799. PMLR.

Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

Robert A Jacobs, Michael I Jordan, Steven J Nowlan, and Geoffrey E Hinton. 1991. Adaptive mixtures of local experts. Neural computation, 3(1):79–87.

Rabeeh Karimi Mahabadi, James Henderson, and Sebastian Ruder. 2021a. Compacter: Efficient lowrank hypercomplex adapter layers. arXiv preprint arXiv:2106.04647.

Rabeeh Karimi Mahabadi, Sebastian Ruder, Mostafa Dehghani, and James Henderson. 2021b. Parameterefficient multi-task fine-tuning for transformers via shared hypernetworks. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 565–576, Online. Association for Computational Linguistics.

Jaejun Lee, Raphael Tang, and Jimmy Lin. 2019. What would elsa do? freezing layers during transformer fine-tuning. arXiv preprint arXiv:1911.03090.

Brian Lester, Rami Al-Rfou, and Noah Constant. 2021. The power of scale for parameter-efficient prompt tuning. arXiv preprint arXiv:2104.08691.

Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Veselin Stoyanov, and Luke Zettlemoyer. 2020. BART: Denoising sequence-to-sequence pretraining for natural language generation, translation, and comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 7871–7880, Online. Association for Computational Linguistics.

Xiang Lisa Li and Percy Liang. 2021. Prefix-tuning: Optimizing continuous prompts for generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 4582–4597, Online. Association for Computational Linguistics.

Jonas Pfeiffer, Aishwarya Kamath, Andreas Rücklé, Kyunghyun Cho, and Iryna Gurevych. 2021. AdapterFusion: Non-destructive task composition for transfer learning. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 487–503, Online. Association for Computational Linguistics.

Jonas Pfeiffer, Andreas Rücklé, Clifton Poth, Aishwarya Kamath, Ivan Vulic, Sebastian Ruder, ´ Kyunghyun Cho, and Iryna Gurevych. 2020a. Adapterhub: A framework for adapting transformers. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020): Systems Demonstrations, pages 46– 54, Online. Association for Computational Linguistics.

Jonas Pfeiffer, Ivan Vulic, Iryna Gurevych, and Se- ´ bastian Ruder. 2020b. MAD-X: An Adapter-Based Framework for Multi-Task Cross-Lingual Transfer. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 7654–7673, Online. Association for Computational Linguistics.

Timo Schick and Hinrich Schütze. 2021. Exploiting cloze-questions for few-shot text classification and natural language inference. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 255–269, Online. Association for Computational Linguistics.

Noam Shazeer, Azalia Mirhoseini, Krzysztof Maziarz, Andy Davis, Quoc Le, Geoffrey Hinton, and Jeff Dean. 2017. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. arXiv preprint arXiv:1701.06538.

Alex Wang, Amanpreet Singh, Julian Michael, Felix Hill, Omer Levy, and Samuel R Bowman. 2019. Glue: A multi-task benchmark and analysis platform for natural language understanding. In International Conference on Learning Representations

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, et al. 2019. Huggingface’s transformers: State-of-the-art natural language processing. arXiv preprint arXiv:1910.03771.

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. 2020. How to benchmark transformer models. https://huggingface.co/ transformers/benchmarks.html.

Jeffrey O Zhang, Alexander Sax, Amir Zamir, Leonidas Guibas, and Jitendra Malik. 2020. Sidetuning: A baseline for network adaptation via additive side networks. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16, pages 698–714. Springer.

A Prefix-tuning vs. Prompt-based Fine-tuning

We note that prefix-tuning (or prompt-tuning) is different from prompt-based fine-tuning methods (Schick and Schütze, 2021; Gao et al., 2021) in many ways: (1) Prompt-based fine-tuning is not parameter-efficient as it updates all model parameters while prefix-tuning only updates the prefix matrix P . (2) The prompts are only used in model input for prompt-based fine-tuning but added to every Transformer layer in prefix-tuning (stored as different vectors). (3) Prompt-based fine-tuning typically leverages carefully designed natural language prompts while prefix-tuning uses continuous prompts (virtual tokens).

B Implementation Details

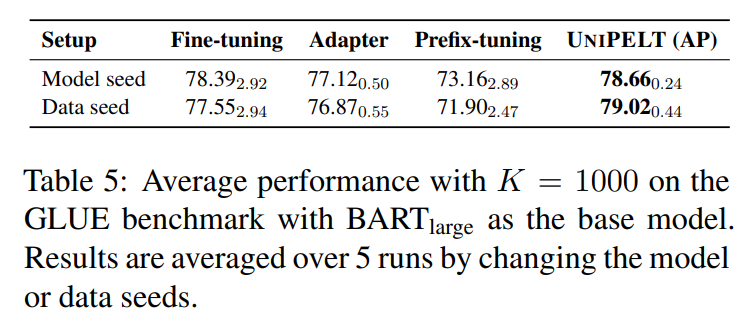

Data Preparation. We shuffle the training set with seed s, take the first K samples as the new training set, and the next 1,000 samples as the development set. We use s = {111, 222, 333, 444, 555} as the data seeds and the same seed (s = 42) for model training. We also conduct another set of preliminary experiments by fixing the data and using 5 different random seeds for model training, the results of which are similar (Table 5).

Hyperparameters. We adopt AdapterHub (Pfeiffer et al., 2020a), a library based on HuggingFace Transformers (Wolf et al., 2019), as our codebase. We largely follow the recommended hyperparameters used in different methods for a fair comparison. We set the input length to 128 and the training batch size to 16. We set the number of epochs to 50 and adopt early stopping with a patience of 10 non-increasing epochs. We set the learning rate of fine-tuning and adapter to 2e-5 and 1e-4 according to the findings in prior studies (Pfeiffer et al., 2020a; He et al., 2021). For prefix-tuning and UNIPELT, as they are not previously evaluated on NLU tasks, we tune their learning rates from {1e-4, 2e-4, 5e-4} on the development set and set to 2e-4 and 5e-4, respectively. For BitFit and LoRA, we choose the learning rates commonly used in their own experiments (1e-4 and 5e-4, respectively). We set α = 2 and r = 8 in LoRA according to its official scripts

C BART Results

D Detailed Performance

In Table 6, we list the detailed results on both development and test sets of the GLUE benchmark. The observations and findings are largely consistent on the two evaluation splits.

Authors:

(1) Yuning Mao, University of Illinois Urbana-Champaign and the work was done during internship at Meta AI ([email protected]);

(2) Lambert Mathias, Meta AI ([email protected]);

(3) Rui Hou, Meta AI ([email protected]);

(4) Amjad Almahairi, Meta AI ([email protected]);

(5) Hao Ma, Meta AI ([email protected]);

(6) Jiawei Han, University of Illinois Urbana-Champaign ([email protected]);

(7) Wen-tau Yih, Meta AI ([email protected]);

(8) Madian Khabsa, Meta AI ([email protected]).

This paper is